Opening the Gate of Text: Embeddings and the Truth of Meaning

Attention is all you need.

In 2017, Google published a paper that changed everything: “Attention Is All You Need.” It introduced the transformer, a model that would go on to become the backbone of almost every major GenAI system today, from ChatGPT to DALL·E.

Sounds like science fiction? Maybe. And today, we’re opening the Gate to understand how it works.

The G̶a̶t̶e̶ GPT:

Generative AI (GenAI) is everywhere: GenAI will revolutionize the world, GenAI is the future, and so on. But what exactly is GenAI, and how is it different from traditional tools?

Generative AI combines two ideas: generative and artificial intelligence. Unlike traditional search engines like Google, which return relevant links or documents based on your query, GenAI generates new content in response to your input, whether that’s text, code, images, or more.

This capability is powered by Large Language Models (LLMs), such as the ones behind ChatGPT and Claude, which can take an open-ended range of user prompts and generate coherent, context-aware outputs. But how does a model predict which word (or even which letter) should come next?

The answer lies in the architecture and training method. GPT stands for Generative Pre-trained Transformer:

- Generative: The model generates new content, rather than just selecting from existing data.

- Pre-trained: The model is trained in advance on vast amounts of text data, allowing it to learn the structure and patterns of language.

- Transformer: This refers to the neural network architecture that powers GPT models, designed to handle sequences of data with mechanisms like self-attention.

How Transformers Work: Step by Step

Transformers are the foundation of Large Language Models like GPT. At their core, transformers take a sequence of tokens (a sentence, prompt, etc.) and generate output tokens based on learned patterns and relationships between words.

“In alchemy, the process matters. You can’t skip steps, or the result fails. It’s the same with transformers.”

Let’s break down how this works, step by step.

1. Tokenization

The input text is first split into small units called tokens.

- A token might be a word, sub-word, or even a single character.

- These tokens are then converted into numbers (usually token IDs) so the model can process them computationally.
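To make the two points above concrete, here is a minimal sketch of a toy word-level tokenizer. The vocabulary and the helper names (`build_vocab`, `encode`, `decode`) are hypothetical and exist only for illustration; real tokenizers usually work on sub-word pieces and are far more elaborate.

```python
# Toy word-level tokenizer (illustrative only, not how GPT tokenizers work).

def build_vocab(text):
    """Give each distinct lowercase word an integer ID, in order of first appearance."""
    vocab = {}
    for word in text.lower().split():
        if word not in vocab:
            vocab[word] = len(vocab)
    return vocab

def encode(text, vocab):
    """Turn text into a list of token IDs."""
    return [vocab[word] for word in text.lower().split()]

def decode(ids, vocab):
    """Turn token IDs back into text."""
    id_to_word = {i: w for w, i in vocab.items()}
    return " ".join(id_to_word[i] for i in ids)

vocab = build_vocab("Ed killed the homunculus and the homunculus killed Ed")
ids = encode("the homunculus killed Ed", vocab)
print(ids)                 # [2, 3, 1, 0]
print(decode(ids, vocab))  # the homunculus killed ed

# Character-level tokens are also possible: one ID per character.
char_ids = [ord(c) for c in "Ed"]
print(char_ids)            # [69, 100]
```

The model never sees the words themselves, only the IDs.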

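Here’s how you can see which tokens a given model’s tokenizer produces (swap the model name to compare tokenizers):

```python
import tiktoken

# Load the tokenizer that matches the target model
enc = tiktoken.encoding_for_model("gpt-4o")

text = "Selim Bradley is a homunculus"

# Convert the text into a list of integer token IDs
tokens = enc.encode(text)

print("Tokens:", tokens)
```

The output is a list of integer token IDs, one for each token in the sentence.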

2. Vector Embeddings

Once we have numerical tokens, they’re mapped into high-dimensional vector space using embedding layers.

This is crucial: tokens alone are just IDs, but embeddings give them meaning by capturing their semantic relationships.
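As a rough sketch of what an embedding layer does, the snippet below treats it as a lookup table: each token ID selects one row of a matrix. The sizes and the random values are made up for illustration; in a real model the table is learned during training and is much larger.

```python
import numpy as np

rng = np.random.default_rng(0)

# Tiny, made-up sizes: 5 tokens in the vocabulary, 4 numbers per embedding.
vocab_size, d_model = 5, 4

# Random values stand in for an embedding table that a real model would learn.
embedding_table = rng.normal(size=(vocab_size, d_model))

# Token IDs for a four-token sentence (hypothetical IDs).
token_ids = np.array([2, 3, 1, 0])

# The "embedding layer" is just a row lookup: one vector per token.
token_vectors = embedding_table[token_ids]

print(token_vectors.shape)  # (4, 4)
```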

Ed uses Amestrian alchemy and Mustang is a master of fire alchemy. What “steel” and “iron” are to Ed, “fire” and “combustion” are to Mustang. In the embedding space, the distance from “Ed” to “steel” is roughly similar to the distance from “Mustang” to “fire”, and that is how the relationships between words are captured by the vector encodings.

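```python
import os

from openai import OpenAI

# Read the API key from the environment rather than hard-coding it
client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

text = "Edward killed the homunculus"

# Request an embedding vector for the whole sentence
response = client.embeddings.create(
    model="text-embedding-3-small",
    input=text,
)

print("Embedding length:", len(response.data[0].embedding))
```

Why it’s important: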

Without embeddings, a model would treat each token as an unrelated ID. Embeddings let it recognize similarity in meaning, so the model relates “cat” to the French “chat” not because the two words look alike, but because both refer to a small animal that drinks milk.
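Similarity between embeddings is commonly measured with cosine similarity. The sketch below uses hand-made 3-dimensional vectors purely for illustration; they are not real model embeddings, and real embeddings have hundreds or thousands of dimensions.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1.0 means very similar direction."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hand-made toy vectors (assumed values, chosen so related words point the same way).
toy_embeddings = {
    "steel":      np.array([0.9, 0.1, 0.0]),
    "iron":       np.array([0.8, 0.2, 0.1]),
    "fire":       np.array([0.1, 0.9, 0.2]),
    "combustion": np.array([0.0, 0.8, 0.3]),
}

steel_iron = cosine_similarity(toy_embeddings["steel"], toy_embeddings["iron"])
steel_fire = cosine_similarity(toy_embeddings["steel"], toy_embeddings["fire"])

print("steel vs iron:", round(steel_iron, 3))
print("steel vs fire:", round(steel_fire, 3))
```

With these toy values, “steel” scores much closer to “iron” than to “fire”, which is the kind of relationship a trained embedding space is expected to capture.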

3. Positional Encoding

Transformers have no built-in sense of order — they process all tokens in parallel. So we must explicitly encode the position of each token in the sequence.

Example:

- “Ed killed homunculus” vs. “Homunculus killed Ed”

- Same tokens, different meanings.

To preserve this order, we add a positional encoding vector to each token embedding. This helps the model distinguish between different sequence structures.
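One way to build these vectors is the sinusoidal scheme from the original transformer paper, sketched below. This is an assumption about which scheme to show: many GPT-style models learn their position vectors instead. The function name and the sizes are illustrative.

```python
import numpy as np

def sinusoidal_positional_encoding(seq_len, d_model):
    """Return a (seq_len, d_model) matrix of sine/cosine position vectors.

    Assumes d_model is even. Even columns hold sines, odd columns hold cosines.
    """
    positions = np.arange(seq_len)[:, None]        # shape (seq_len, 1)
    even_dims = np.arange(0, d_model, 2)[None, :]  # shape (1, d_model / 2)
    angles = positions / np.power(10000.0, even_dims / d_model)

    encoding = np.zeros((seq_len, d_model))
    encoding[:, 0::2] = np.sin(angles)
    encoding[:, 1::2] = np.cos(angles)
    return encoding

rng = np.random.default_rng(0)
seq_len, d_model = 3, 8

# Made-up token embeddings for a three-token sentence.
token_embeddings = rng.normal(size=(seq_len, d_model))

# The model input is the token embedding plus the position vector.
position_vectors = sinusoidal_positional_encoding(seq_len, d_model)
model_inputs = token_embeddings + position_vectors

print(model_inputs.shape)  # (3, 8)
```

Because each position gets a different vector, the same token ends up with a different input representation depending on where it appears in the sentence.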

4. Multi-Head Attention

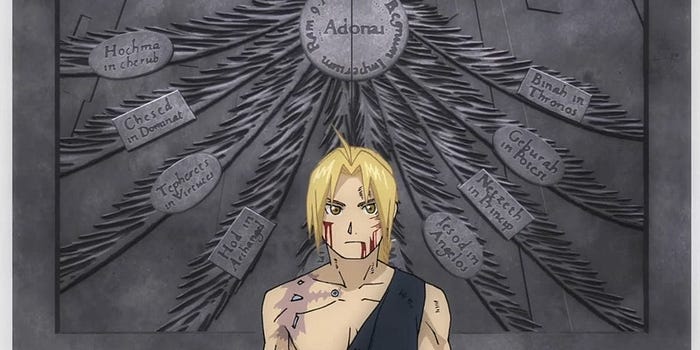

The Gate of Truth vs. the gate of Central

This is where the real magic happens. The same word can have different meanings depending on the words around it. When “gate” is used with “truth”, we know it refers to the supernatural gate to the other world, but when “gate” is used with “Central”, we know it is the physical door used to enter the palace.

What is Self-Attention?

For each token, the model asks:

“Which other tokens in this sequence are important to me?”

Each token generates:

- Query (what it’s looking for)

- Key (what it contains)

- Value (the actual content)

By comparing queries and keys, the model calculates attention weights, and then uses those to combine the values — creating a context-aware representation of each token.
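The query, key, and value steps described above can be sketched in a few lines of NumPy. This is a simplified single-head version under stated assumptions: the weight matrices are random rather than learned, the sizes are made up, and there is no causal mask (GPT-style models add one so a token cannot look at later tokens).

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exps = np.exp(shifted)
    return exps / exps.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention over one sequence of token vectors."""
    queries = x @ w_q   # what each token is looking for
    keys = x @ w_k      # what each token contains
    values = x @ w_v    # the actual content to pass along

    # Compare every query with every key, scaled by sqrt of the key size.
    scores = queries @ keys.T / np.sqrt(keys.shape[-1])

    # Each row becomes a set of attention weights that sums to 1.
    weights = softmax(scores, axis=-1)

    # Each output is a weighted mix of the value vectors.
    return weights @ values, weights

rng = np.random.default_rng(0)
seq_len, d_model, d_head = 4, 8, 8

x = rng.normal(size=(seq_len, d_model))  # made-up token vectors
w_q, w_k, w_v = (rng.normal(size=(d_model, d_head)) for _ in range(3))

output, weights = self_attention(x, w_q, w_k, w_v)
print(output.shape)   # (4, 8)
print(weights.shape)  # (4, 4): one row of weights per token
```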

What is Multi-Head Attention?

Instead of doing this once, the model performs multiple attention operations in parallel (each with different weight matrices). This helps it capture multiple types of relationships simultaneously — like syntax, sentiment, or topic.
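A minimal sketch of the multi-head idea follows: the projected vectors are split into several smaller heads, each head runs its own attention, and the results are concatenated and mixed by an output matrix. The weights are random, the sizes are made up, and the function name is illustrative.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exps = np.exp(shifted)
    return exps / exps.sum(axis=axis, keepdims=True)

def multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """Run num_heads attention operations in parallel and merge their outputs."""
    seq_len, d_model = x.shape
    assert d_model % num_heads == 0, "d_model must divide evenly across heads"
    d_head = d_model // num_heads

    def split_heads(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    queries = split_heads(x @ w_q)
    keys = split_heads(x @ w_k)
    values = split_heads(x @ w_v)

    # Attention is computed independently inside every head.
    scores = queries @ keys.transpose(0, 2, 1) / np.sqrt(d_head)
    weights = softmax(scores, axis=-1)   # (num_heads, seq_len, seq_len)
    per_head = weights @ values          # (num_heads, seq_len, d_head)

    # Concatenate the heads back into one vector per token, then mix them.
    merged = per_head.transpose(1, 0, 2).reshape(seq_len, d_model)
    return merged @ w_o, weights

rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 4, 8, 2

x = rng.normal(size=(seq_len, d_model))  # made-up token vectors
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))

mha_output, mha_weights = multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(mha_output.shape)   # (4, 8)
print(mha_weights.shape)  # (2, 4, 4): a separate attention pattern per head
```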

5. Training with Loss and Backpropagation

During training, the model predicts the next token, and that prediction is compared with the token that actually comes next in the training text. This comparison produces a loss value that measures how far off the prediction was.

- This error is backpropagated through the network.

- The model adjusts its weights slightly to improve next time.

- This process repeats across millions of examples, gradually reducing the loss.
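The loop above can be sketched on a deliberately tiny problem. This is not a transformer: a single weight matrix stands in for the whole network, the loss is cross-entropy (a common choice for next-token prediction), and the gradient is written out by hand. All values and names are made up for illustration.

```python
import numpy as np

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    shifted = logits - logits.max()
    exps = np.exp(shifted)
    return exps / exps.sum()

vocab_size, d_model = 5, 4

# Stand-in for the model's internal vector at one position (assumed values).
hidden = np.array([1.0, -0.5, 0.3, 0.8])

# One weight matrix maps the hidden vector to a score per vocabulary token.
weights = np.zeros((d_model, vocab_size))

target_id = 3        # the token that actually comes next
learning_rate = 0.1

losses = []
for step in range(50):
    # Forward pass: predict a probability for every token in the vocabulary.
    probs = softmax(hidden @ weights)

    # Cross-entropy loss: small when the target token gets high probability.
    loss = -np.log(probs[target_id])
    losses.append(loss)

    # Backward pass: gradient of the loss with respect to the scores is
    # (predicted probabilities - one-hot target), then chained to the weights.
    grad_logits = probs.copy()
    grad_logits[target_id] -= 1.0
    grad_weights = np.outer(hidden, grad_logits)

    # Adjust the weights slightly in the direction that reduces the loss.
    weights -= learning_rate * grad_weights

print("first loss:", round(float(losses[0]), 4))
print("last loss: ", round(float(losses[-1]), 4))
```

The loss starts at the value for a uniform guess over five tokens and shrinks as the weights are corrected step by step.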

Alchemist’s Rule:

No transformation is perfect on the first try. Like Edward refining his technique over time, the model learns by failing and correcting, again and again, until it minimizes error.

Don’t forget: attention is all you need.